When it comes to designing parts, it’s best not to reinvent the wheel.

Imagine, as an engineer, spending hours, days, or even months designing a part, only to find out later that it already exists. Unfortunately, this is all too common, especially at machine builders and manufacturing facilities that have been operating for decades. The problem is that a part designed 30 years ago may be filed away in a cabinet somewhere as a 2D drawing. And while that data can be digitized and stored online, it still isn’t searchable, and there can be hundreds of thousands of drawings to sift through.

But an AI-powered platform called Caddi Drawer is ushering in a new era of searchable 2D data. The drawing management platform reads, analyzes, and stores 2D drawings and their data, centralizing them in a single location regardless of format and making the content easily searchable and accessible. Engineers can search archived historical drawings by any keyword, including material, size, designer’s name, part name, or a note. The platform can even read and search handwritten drawings. This is welcome news for engineers working in a space that has historically been paper-based.

“Some of the oldest drawings that we’ve brought into our system are from the 1920s,” says Chris Brown, Caddi’s vice president of sales. “It’s amazing. They were handwritten, and our technology was still able to read them and digitize them into searchable data.”

Led by Yushiro Kato, co-founder and CEO, Caddi has been operating in Japan for about six years, focusing on the automotive and construction equipment industries. In 2023, Caddi opened an office in Chicago to position itself for the U.S. market. The expansion also includes reaching out to new market segments, including packaging OEMs.

“We’re realizing that there’s a lot of integrators and line builders that are in the packaging industry. [The companies] might only be 15 or 20 years old, but because there are so many parts and components, and every installation is completely customized, they’ve created a massive amount of drawings for themselves as well,” Brown explains. “And rather than have somebody sit there and search through all these drawings on a computer, we can enable them with a similarity search, based on the shape of parts and the environment that they’re installing these parts into.”

The company’s AI technology is cutting-edge, so much so that Caddi recently closed $89 million in Series C funding, bringing its total funding to $164 million. Caddi was also named one of Fast Company’s Most Innovative Companies in 2024.

To get a better idea of what Caddi Drawer is all about, Design World caught up with Brown at the PACK EXPO East show in Philadelphia to ask him a few more questions.

Q: Is Caddi Drawer a CAD system?

Chris Brown: So, we don’t provide any way to draw a part. We are not a CAD system. What we are doing is digitizing 2D drawings, typically in PDF format, using two pieces of artificial intelligence, one of which is traditional OCR [optical character recognition]. We’re looking at the tolerance callouts, the geometric dimensioning and tolerancing [GD&T] specifications, and the dimensions of the part, reading everything that’s in the title block or the notes section, and making all of that searchable. Before, you had to rely on either giving the file a name you could search for, or using a product data management [PDM] system to categorize your parts and only being able to search by those categories.

We’re able to search for anything that’s on that drawing. So, for example, if the notes section says “integrates with part number XYZ,” that information wouldn’t be in any system that currently exists. And let’s just say the first part was drawn in the ’80s, right? And no one who has been at the company for the last five years remembers that drawing. Then some new company comes along and says, “I need something that integrates with component XYZ.” In the past, they would have started an entirely new engineering development sprint to develop something to integrate with that. Now, they can go into Caddi, simply search “integrates with XYZ,” and it’ll find that text on the drawing and pull it forward for them. They can do a quick review, and if it is exactly what they need, they don’t have to reinvent the wheel. Or they can use it as a fast forward in their design and say, “Actually, I like 80% of this, so we’re going to start with this drawing, but we’re going to modify a few things here.” That saves 80% of the time it takes to start that drawing and figure out how to start all of that engineering work.
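To make the keyword-search idea concrete, here is a minimal Python sketch of searching text that has already been extracted from drawings. It is not Caddi’s implementation: the `Drawing` record, its field names, and the `keyword_search` function are hypothetical, and the sketch assumes OCR has already turned each title block, note, and dimension callout into plain strings.

```python
from dataclasses import dataclass, field


@dataclass
class Drawing:
    """Hypothetical record for one digitized 2D drawing after OCR (field names are illustrative)."""
    drawing_id: str
    title_block: dict[str, str]                          # e.g. part name, material, designer
    notes: list[str] = field(default_factory=list)       # free-text notes section
    dimensions: list[str] = field(default_factory=list)  # dimension and tolerance callouts


def searchable_text(d: Drawing) -> str:
    """Flatten every extracted field into one lowercase string so any keyword can match."""
    parts = list(d.title_block.values()) + d.notes + d.dimensions
    return " ".join(parts).lower()


def keyword_search(drawings: list[Drawing], query: str) -> list[str]:
    """Return IDs of drawings whose extracted text contains every term in the query."""
    terms = query.lower().split()
    return [d.drawing_id for d in drawings
            if all(term in searchable_text(d) for term in terms)]


if __name__ == "__main__":
    # Hypothetical archive: an old bracket drawing whose note mentions part XYZ.
    archive = [
        Drawing("D-1984-017", {"part_name": "Mounting bracket", "designer": "R. Smith"},
                notes=["Integrates with part number XYZ"]),
        Drawing("D-2011-342", {"part_name": "Shaft collar", "material": "1018 steel"},
                notes=["Break all sharp edges"], dimensions=["OD 25.00 +0.05/-0.00"]),
    ]
    print(keyword_search(archive, "integrates with XYZ"))  # ['D-1984-017']
```

Plain substring matching like this also matches partial words; a production search index would typically tokenize the text and rank results rather than simply filter them.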

Chris Brown, VP of Sales, Caddi Inc.

Q: So, it accelerates the design process. Does it also enable some level of collaboration between engineers?

Brown: Think more in terms of the collaboration that happens between engineering and procurement. The piece of AI technology I haven’t explained yet is the shape recognition AI that we built to go along with that text recognition AI. Here’s a use case: an engineer finishes drawing their part, let’s just say that it’s a bearing, right? They’ve got their drawing completely done in AutoCAD, and they go to hand it off to procurement. Maybe it’s a newer member of their procurement team. Maybe some institutional knowledge was lost when somebody left the organization, and this new person doesn’t know who their three suppliers of bearings are, for example. And they don’t even really know what those parts should cost. So that procurement person is typically going to go around the office and ask people, or do a Google search, or go on one of the online directories [to look at] all of the manufacturers to try to find somebody. Instead, they could take that drawing, load it into Caddi, and through our shape analysis and recognition, easily find all 100 bearings that the company already sources. And because we’re connected to ERP systems, procurement systems, and quality management systems, they’ll be able to see who they’re sourcing them from, what the average cost per part is when sourcing those types of parts from that company, and what their quality scores and on-time delivery scores are. Now, they can instantly analyze that and say, “Oh, these are my three top-performing companies for this particular part,” based on the shape of the part rather than just the description, and go right out to quote with those companies already knowing about what it should cost. That way they have a lot of checks and balances in place and can feel educated, even though the person who knew all this information had long since left the organization.
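The procurement workflow Brown describes, matching a part by shape and then looking up who already supplies similar parts, can be sketched in a few lines of Python. This is an illustrative sketch, not Caddi’s method: it assumes each drawing has already been reduced to a numeric shape descriptor by some image model, uses cosine similarity as a stand-in for whatever matching the product actually performs, and treats the `SourcedPart` cost, quality, and on-time fields as hypothetical values joined in from ERP and quality systems.

```python
import math
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class SourcedPart:
    """Hypothetical join of one drawing with its sourcing history (all fields assumed)."""
    drawing_id: str
    shape_vector: list[float]  # assumed shape descriptor produced by some image model
    supplier: str
    unit_cost: float           # e.g. pulled from an ERP or procurement system
    quality_score: float       # e.g. 0-100 score from a quality management system
    on_time_rate: float        # fraction of orders delivered on time, 0.0-1.0


@dataclass
class SupplierSummary:
    supplier: str
    parts_matched: int
    avg_unit_cost: float
    avg_quality: float
    avg_on_time: float


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two descriptor vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def similar_parts(query: list[float], parts: list[SourcedPart],
                  threshold: float = 0.9) -> list[SourcedPart]:
    """Keep previously sourced parts whose shape descriptor is close to the query's."""
    return [p for p in parts if cosine_similarity(query, p.shape_vector) >= threshold]


def rank_suppliers(matches: list[SourcedPart], top_n: int = 3) -> list[SupplierSummary]:
    """Group matching parts by supplier and rank by quality, then on-time rate, then cost."""
    by_supplier: dict[str, list[SourcedPart]] = defaultdict(list)
    for part in matches:
        by_supplier[part.supplier].append(part)
    summaries = [
        SupplierSummary(
            supplier=name,
            parts_matched=len(ps),
            avg_unit_cost=mean(p.unit_cost for p in ps),
            avg_quality=mean(p.quality_score for p in ps),
            avg_on_time=mean(p.on_time_rate for p in ps),
        )
        for name, ps in by_supplier.items()
    ]
    # Higher quality and on-time rate rank first; lower average cost breaks remaining ties.
    summaries.sort(key=lambda s: (-s.avg_quality, -s.avg_on_time, s.avg_unit_cost))
    return summaries[:top_n]
```

The 0.9 similarity threshold and the quality-first ranking order are arbitrary choices for illustration; a real procurement team might weight cost or delivery performance differently.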

Q: How is all this information aggregated?

Brown: So, let’s just say we go into an organization that has 250,000 to half a million drawings. Those are typically stored in either an AutoCAD Vault program or some kind of PDM program, and we’ve even seen them just stored on a Google Drive. We’ll go in and transfer that entire Google Drive or PDM folder over into our system. It takes about three to four days for our AI to consume all of that data, convert everything into metadata, and store it on our servers to make it instantly searchable.

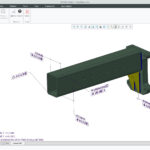

Caddi Drawer technology applies shape and text analysis to 2D drawings.

It’s a cloud-based solution, so users, no matter where they are, can access it as long as they’re given access through either single sign-on or two-factor authentication. Imagine, for example, your maintenance and repair operators who are trying to fix the line, maintain it, and keep it moving. Let’s just say that a bearing goes down. They can have an iPad with our web app running on it. Rather than having to remember that this is assembly XYZ or ABC, flip through all of those drawings to find which bearing it was, and then go order it, they can just take a quick picture of the part, bring it onto their iPad, add a couple of dimensions to it, and search right from that picture, and our system will pull up the matching drawings in your system. If it’s connected to an inventory management system, for example, it could instantly tell you, “This part is a 99.9% match to the one that you just took a picture of. It’s on shelf 15, bin 20. Go grab one, see if it’s the same part, and try that.” In that event, it’s probably saving that person an hour and a half of just researching and trying to figure out what part just broke before they can go find it on the shelf.

Q: Why are you entering the packaging market? What is the opportunity you see here?

Brown: I see a lot of really, really, really old assembly lines, right? I see a lot of equipment that is wearing, and I see a lot of professionals who are getting close to retirement age. I also see a lot of young professionals who are afraid to get into the industry because not a lot of documentation has occurred about what’s happening on the shop floor and what’s happening in the engineering space. We’re bringing forth all of this institutional knowledge and making it searchable in a way that is very familiar to the younger generation. We like to call ourselves kind of a Google image search of 2D drawings. And that’s a very familiar process to somebody who’s just graduating from college with a packaging degree. Rather than [joining] a company and having to dig through all of that data, which they’re not going to have the time to do while meeting the demands of the floor and the line, they can use this as a great way to enter the market. And it’s a great way to preserve the 100 years’ worth of knowledge that has been created and that otherwise would have gone by the wayside.

